Teacher moderation that contributes to effective teaching

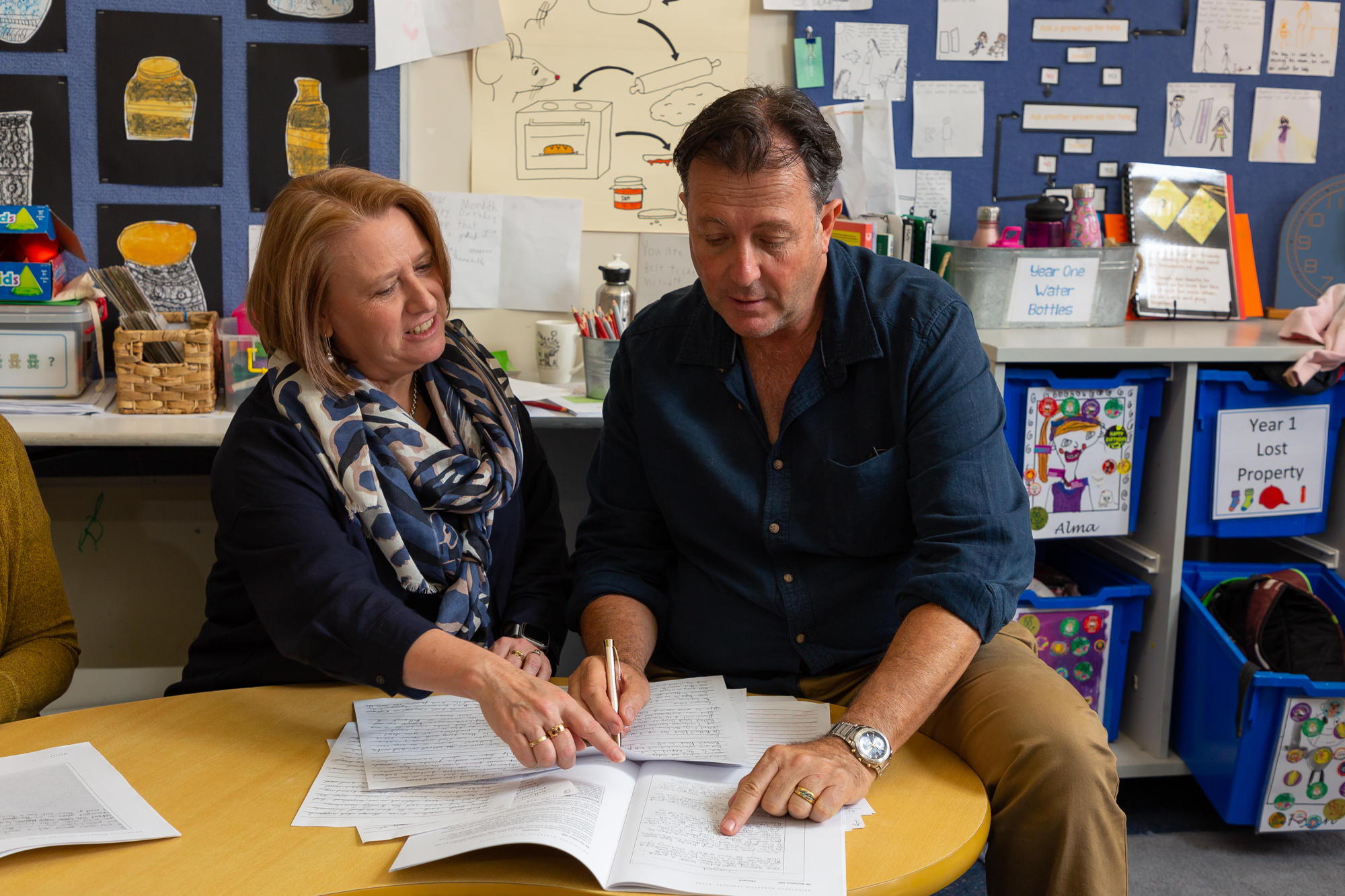

Moderation is demanding and time consuming, yet it often leads to some of the most meaningful discussions about student learning that teachers have.

I would like to share with you the work we are doing to support teachers with moderation of students’ extended writing performances. I will start with a short discussion of how we use the term ‘moderation’ in Australian schools.

Moderation in the Australian school context

Australian departmental policy documents typically describe moderation as the practice of teachers or students sharing and developing their understanding of what learning looks like by examining examples of students' work of different types and quality, and comparing these with formal standards and success criteria.

Some time ago I was asked to draft the assessment principles for inclusion in the Western Australian curriculum. In the supporting documents, I made a distinction between Moderation for Reporting and Moderation for Learning.

I described Moderation for Reporting as ‘enabling teachers to develop consistent judgements of student performance’ and I explained ‘it is closely aligned with the summative purposes of assessment.’ Moderation for Learning, I explained, ‘supports teachers within and across schools in developing shared understandings of students’ learning and shared expectations of student performance. It is closely aligned with the formative purposes of assessment.’ I went on to explain, ‘Moderation for Learning focuses on teachers working together to reach more in-depth understandings of their students’ learning …. When moderation processes focus on learning, they support teachers in refining their understandings of what their students know and what they need to learn next. In this context, the moderation process should use fine-grained information about specific aspects of learning.’

Since drafting the Western Australian assessment principles, we have substantially advanced our research on supporting teacher moderation and have developed the Brightpath software. Whilst I still think it is useful to distinguish between Moderation for Reporting and Moderation for Learning, because the distinction clarifies the different purposes of moderation, I have come to understand more fully that the assessment process we have developed in Brightpath readily supports both. I will come back to this shortly, but first I want to discuss why moderation is important.

Role of moderation in effective teaching

I want to discuss the measurement concepts of reliability and validity. Please bear with me, but this discussion is important.

The concepts of reliability and validity are relevant to all assessments but are more often discussed in relation to standardised testing. In case it has been a while since you thought about these concepts, reliability refers to the consistency of the outcomes that would be observed if an assessment process were repeated. In the context of teachers' assessments or judgements of their students' performance, reliability relates to the generalisability of scores across markers or teachers. That is, did teachers give similar scores or marks to a given student performance, or similar scores to performances of a similar standard? Policy documents often use terms such as consistency, dependability or moderation as proxies for reliability.

Validity refers to the extent to which the scores from a measure represent the variable they are intended to represent. Put more simply, the test assesses what it purports to assess and not some other spurious factor or factors. For example, a maths test assesses maths ability, and the speed at which students work does not hinder their ability to demonstrate their mathematical understanding.

I have recently come to appreciate that reliability is best thought of as an aspect of validity, thanks to Paul Black and Dylan Wiliam's discussion in their chapter in Assessment and Learning (Black and Wiliam, 2012). I will explain their view of the relationship between validity and reliability, as best I can, by way of a scenario. Two teachers score a common set of 25 performances, and their rank orderings of the performances differ considerably. In such a case we could comfortably say that their scoring has not been consistent or reliable. We can also say, however, that the different rank orderings suggest problems with the validity of the assessments. As students' writing ability increases, their scores should increase. Markedly different rank orderings would suggest that the teachers have differing understandings of what it means to be a better writer. Perhaps one of the teachers focused too much on the students' spelling, and this focus stopped the teacher from correctly assessing the authorial aspects of the writing. In essence, this teacher is assessing development in spelling, not development in writing.
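To make the scenario concrete, here is a minimal sketch of one way to quantify how similarly two teachers rank-order a common set of performances: Spearman's rank correlation, which is the Pearson correlation of the two sets of ranks. The scores and the function names (`average_ranks`, `pearson`, `spearman`) are hypothetical illustrations, and Spearman's rho is simply one common choice for comparing rank orderings, not necessarily the statistic used by Black and Wiliam or in our studies.

```python
from typing import Sequence


def average_ranks(scores: Sequence[float]) -> list[float]:
    """Rank scores from 1 (lowest) upward; tied scores share their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied scores.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the two teachers' rankings."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores two teachers gave the same five performances.
teacher_a = [12, 18, 25, 31, 40]
teacher_b = [20, 15, 30, 22, 35]
print(round(spearman(teacher_a, teacher_b), 2))  # prints 0.8
```

A value of 1 means the two teachers order the performances identically; lower values signal the kind of disagreement about what makes one piece of writing better than another that, in Black and Wiliam's terms, raises a validity question as well as a reliability one.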

You may well be asking yourself what the above discussion has to do with the role of moderation in effective teaching. We know that moderation is important when reporting on student achievement, but I believe it has a more important, and largely unacknowledged, role in effective teaching. If teachers' assessments have low validity, then it is likely that they have not successfully identified where their students are in their learning and are not teaching to their students' points of need. Return to the scenario of the teacher who focuses on students' spelling when assessing their writing and does not correctly assess its authorial aspects. Because this teacher has not adequately identified where her students are in their writing development, it is highly probable that her teaching is not targeted to what they need to learn next to become more effective writers.

Supporting teacher moderation

This brings me to how we support teacher moderation of student writing. I believe that Brightpath supports a robust moderation process and, in doing so, supports both Moderation for Reporting and Moderation for Learning.

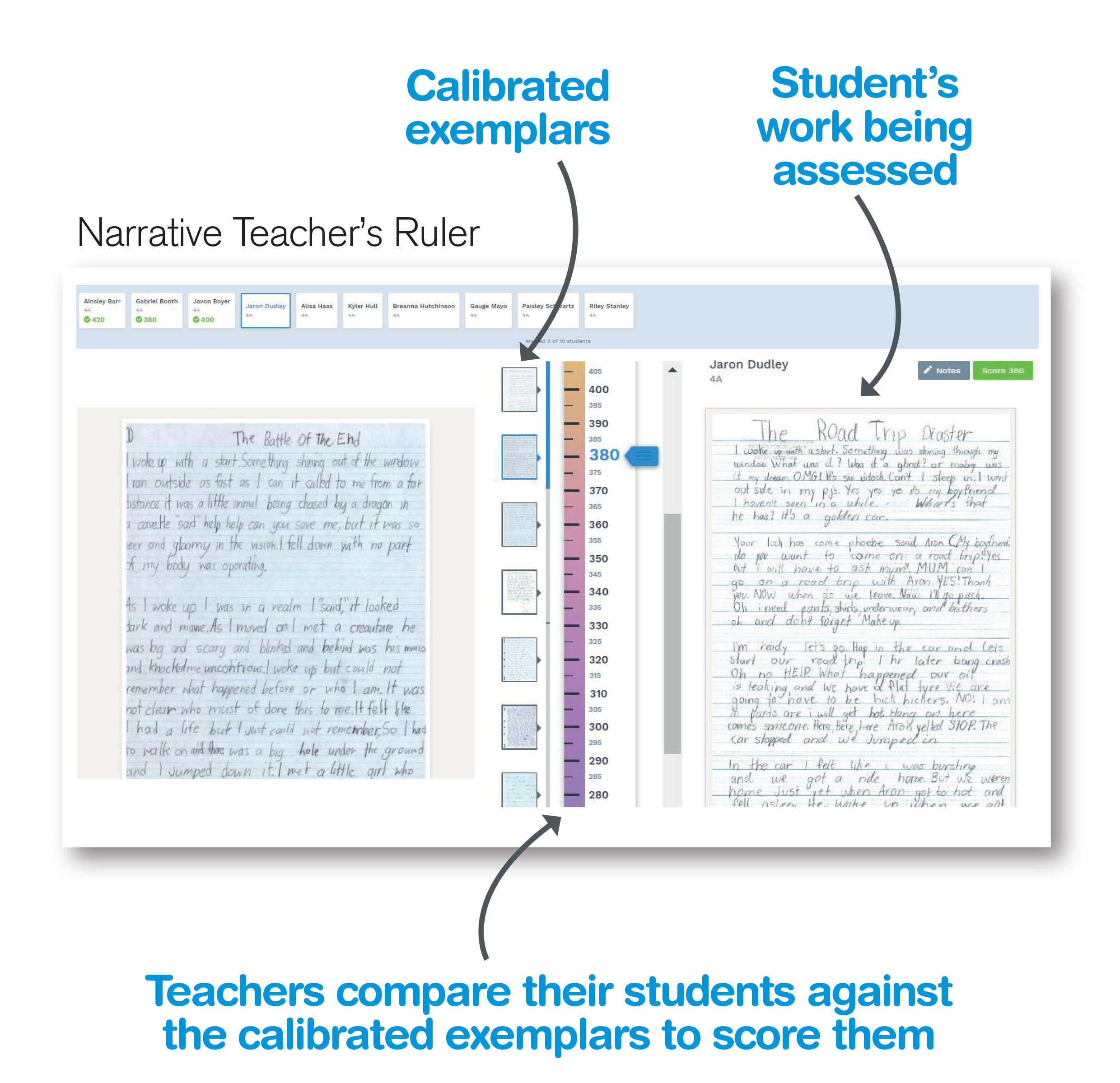

In Brightpath, teachers assess their students' writing performances by making on-balance judgements. Based on their analysis of the strengths and weaknesses of each performance, they determine which exemplar the performance is closest to, or which two exemplars it falls between. The assessment process is represented graphically in Figure 1.

Figure 1: Explanation of how teachers assess writing within Brightpath
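The judgement described above can be pictured as placing each performance against an ordered set of exemplars. The sketch below is purely hypothetical: the `Exemplar` type, its `scale_value` field and the `place_performance` function are invented for illustration, and so is the rule that a performance falling between two exemplars sits at the midpoint of their scale values. This is an assumption for the example only and is not a description of how Brightpath converts judgements into scale scores.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Exemplar:
    label: str
    scale_value: float  # hypothetical calibrated location on the writing scale


def place_performance(
    exemplars: list[Exemplar], lower: str, upper: Optional[str] = None
) -> float:
    """Turn an on-balance judgement into a hypothetical scale score.

    - upper is None: the performance is judged closest to exemplar `lower`.
    - otherwise: it falls between two adjacent exemplars and, as an
      illustrative assumption, is placed at the midpoint of their values.
    """
    ordered = sorted(exemplars, key=lambda e: e.scale_value)
    position = {e.label: i for i, e in enumerate(ordered)}
    lo = position[lower]
    if upper is None:
        return ordered[lo].scale_value
    hi = position[upper]
    if hi != lo + 1:
        raise ValueError("a performance can only fall between adjacent exemplars")
    return (ordered[lo].scale_value + ordered[hi].scale_value) / 2


# Hypothetical exemplars and two example judgements.
exemplars = [Exemplar("E1", 300.0), Exemplar("E2", 380.0), Exemplar("E3", 450.0)]
print(place_performance(exemplars, "E2"))        # closest to E2 -> 380.0
print(place_performance(exemplars, "E2", "E3"))  # between E2 and E3 -> 415.0
```

Restricting "between" judgements to adjacent exemplars mirrors the wording above: a teacher either matches a performance to one exemplar or locates it in the gap between two neighbouring ones.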

We have completed numerous studies to check the reliability of teacher judgements made using the assessment process in Brightpath. In these studies, groups of teachers score a common set of performances. Table 1 shows the data from one such study. The rater-average correlation is the correlation between each rater's (teacher's) scores and the average scores given by all the other teachers. A correlation of 1.0 would indicate perfect agreement between a teacher and the other teachers' average about the relative standing of the performances. As Table 1 shows, the teachers were in very close agreement about the relative ordering of the performances in this study (correlations ranged from 0.813 to 0.977), and this finding has been replicated in numerous other studies.

Table 1: Interrater reliability and harshness indicators

| Judge | Rater-average correlation | Harshness |
|---|---|---|
| J1 | 0.973 | 10.6 |
| J2 | 0.951 | 9.0 |
| J3 | 0.913 | 18.8 |
| J4 | 0.939 | -2.9 |
| J5 | 0.939 | -4.6 |
| J6 | 0.975 | 11.7 |
| J7 | 0.878 | 6.1 |
| J8 | 0.813 | -20.1 |
| J9 | 0.919 | 6.3 |
| J10 | 0.936 | -7.1 |
| J11 | 0.969 | 0.3 |
| J12 | 0.943 | -7.1 |
| J13 | 0.912 | 15.9 |
| J14 | 0.893 | -1.1 |
| J15 | 0.977 | 11.0 |
| J16 | 0.948 | 4.3 |
| J17 | 0.948 | -2.2 |
| J18 | 0.967 | 13.0 |
| J19 | 0.948 | 5.4 |
| J20 | 0.865 | -1.1 |
| J21 | 0.907 | -17.6 |
| J22 | 0.938 | 19.5 |
| J23 | 0.892 | 10.3 |
| J24 | 0.894 | -29.4 |
| J25 | 0.893 | -22.5 |
| J26 | 0.935 | 7.2 |
| J27 | 0.944 | -13.1 |
| J28 | 0.920 | 19.0 |
| J29 | 0.940 | -4.4 |
| J30 | 0.933 | -31.0 |
| J31 | 0.935 | -3.1 |
| J32 | 0.913 | -9.1 |
| J33 | 0.848 | -10.9 |
| J34 | 0.971 | 0.9 |
| J35 | 0.893 | -19.4 |
| J36 | 0.968 | 9.7 |
| J37 | 0.967 | 27.5 |

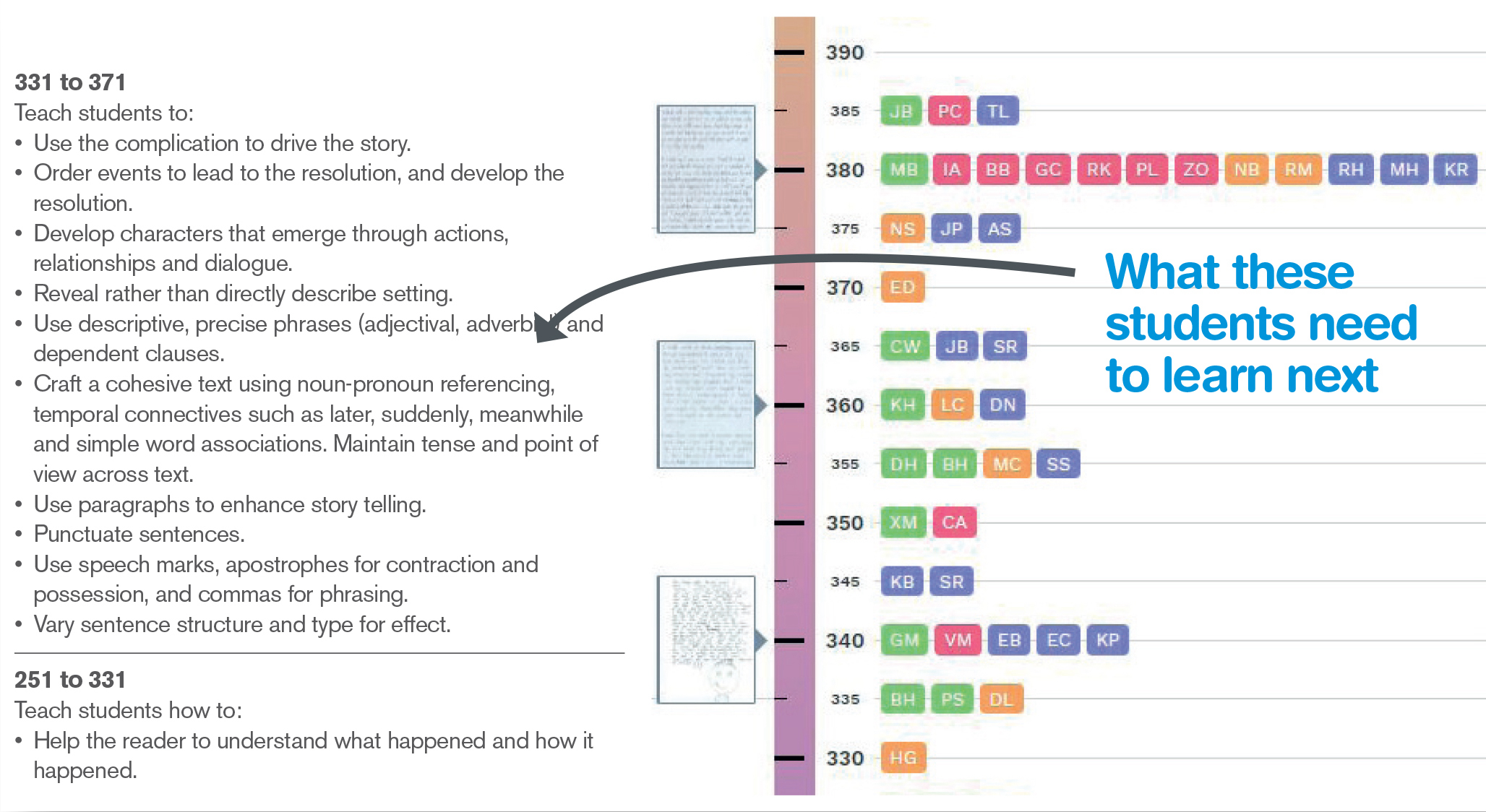

We continue to check the reliability of teachers' assessments made with Brightpath, because reliable scoring is fundamental if teachers are to use the data to inform their teaching. Figure 2 shows the report we provide to guide teachers on what to teach their students next, based on the scores they have given them. This information is of limited value if teachers have not placed their students correctly on the Brightpath scale.

Figure 2: Extract of the Brightpath teaching points display

Validity of the Brightpath scale

- Does Brightpath provide valid assessments of students' writing?
- Does using Brightpath to assess students lead to more effective teaching?

To check the concurrent validity of the Brightpath scale, we had expert markers mark a set of performances twice: once using the NAPLAN (National Assessment Program – Literacy and Numeracy) marking guide and once using the Brightpath calibrated exemplars. The two sets of scores were highly correlated, indicating that judgements of writing made using Brightpath are valid insofar as marking with the NAPLAN guide provides evidence of validity. More broadly, ACARA (the Australian Curriculum, Assessment and Reporting Authority) used the Brightpath exemplars and performance descriptors to inform the drafting of the Australian learning progressions.

This leaves the question of whether using Brightpath leads to more effective teaching. Findings from a study conducted independently of us are promising. The study found that students in schools with high Brightpath usage progressed substantially more than students in schools not using Brightpath: an additional 3 months of progress between Years 3 and 5.

A further, and significant, breakthrough in how we support teacher moderation

I started this short discussion with the observation that moderation is demanding and time consuming, yet it often leads to some of the most meaningful discussions about student learning. Motivated by a desire to recover time for teachers, we have researched a way of providing automated marking that saves teachers time without jeopardising the benefits of teachers assessing their own students and working with colleagues to moderate their assessments. This short video shows the result of this research: the new Brightpath Marking Assistant in action.

We have come a long way, and as with all our work, we will continue to research and refine our support for teachers so that we can help them use Brightpath in ways that contribute to effective teaching.

References

Black, P., and Wiliam, D. (2012). “The reliability of assessments,” in Assessment and Learning, 2nd Edn, ed J. Gardner (London: Sage Publications Ltd.), 243–263.

Humphry, S. M., and Heldsinger, S. (2020). A two-stage method for obtaining reliable teacher assessments of writing. Frontiers in Education. Available at https://www.frontiersin.org/article/10.3389/feduc.2020.00006.